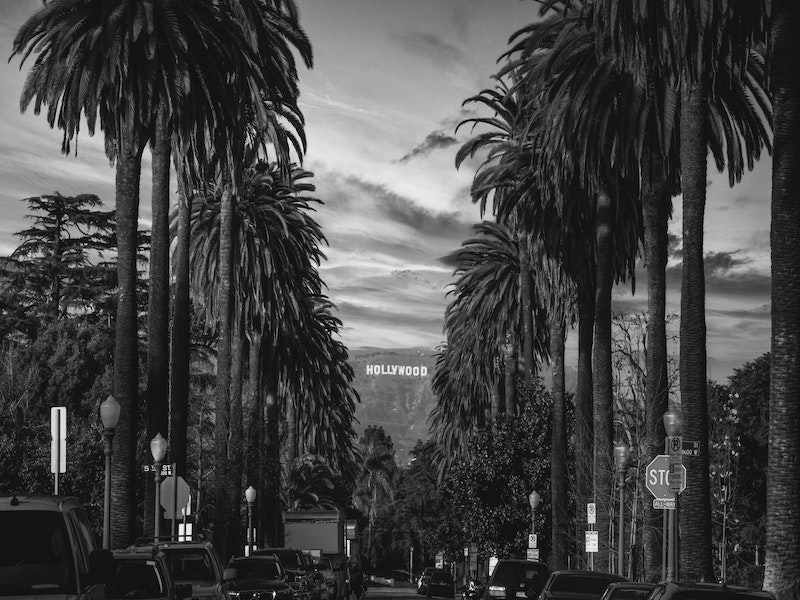

Has Hollywood's Obsession With the Nazis Made Racism Worse? The pursuit of profits means the social impact of the film industry is neglected. Hollywood has a moral responsibility to do better.

World War II ended 75 years ago, but you wouldn't think so given how Hollywood continues to churn out films on the subject. As the villains of the piece, the Nazis are front…